Beta-binomial distribution

| Probability mass function |

|

| Cumulative distribution function |

|

| Parameters | n ∈ N0 — number of trials (real) (real) (real) (real) |

|---|---|

| Support | k ∈ { 0, …, n } |

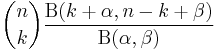

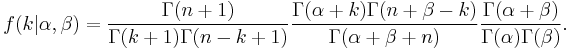

| PMF |  |

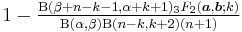

| CDF |  where 3F2(a,b,k) is the generalized hypergeometric function =3F2(1, α + k + 1, −n + k + 1; k + 2, −β − n + k + 2; 1) |

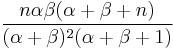

| Mean |  |

| Variance |  |

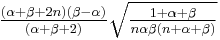

| Skewness |  |

| Ex. kurtosis | See text |

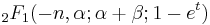

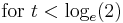

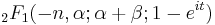

| MGF |   |

| CF |   |

In probability theory and statistics, the beta-binomial distribution is a family of discrete probability distributions on a finite support of non-negative integers arising when the probability of success in each of a fixed or known number of Bernoulli trials is either unknown or random. It is frequently used in Bayesian statistics, empirical Bayes methods and classical statistics as an overdispersed binomial distribution.

It reduces to the Bernoulli distribution as a special case when n = 1. For α = β = 1, it is the discrete uniform distribution from 0 to n. It also approximates the binomial distribution arbitrarily well for large α and β. The beta-binomial is a one-dimensional version of the multivariate Pólya distribution, as the binomial and beta distributions are special cases of the multinomial and Dirichlet distributions, respectively.


Motivation and derivation

Beta-binomial distribution as a compound distribution

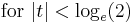

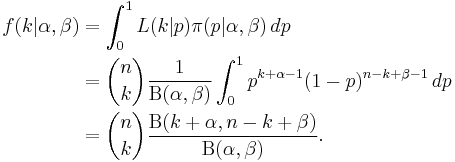

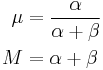

The Beta distribution is a conjugate distribution of the binomial distribution. This fact leads to an analytically tractable compound distribution where one can think of the  parameter in the binomial distribution as being randomly drawn from a beta distribution. Namely, if

parameter in the binomial distribution as being randomly drawn from a beta distribution. Namely, if

is the binomial distribution where p is a random variable with a beta distribution

then the compound distribution is given by

Using the properties of the beta function, this can alternatively be written

It is within this context that the beta-binomial distribution appears often in Bayesian statistics: the beta-binomial is the predictive distribution of a binomial random variable with a beta distribution prior on the success probability.
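As a minimal sketch of the compound construction, the closed form $\binom{n}{k}\mathrm{B}(k+\alpha, n-k+\beta)/\mathrm{B}(\alpha,\beta)$ can be compared with a direct numerical evaluation of the mixing integral. The function names and the parameter values (n = 10, α = 2, β = 3) are illustrative assumptions, and the midpoint rule is only a crude stand-in for a proper quadrature routine.

```python
from math import comb, exp, lgamma, log

def log_beta(a, b):
    """Natural log of the beta function B(a, b)."""
    return lgamma(a) + lgamma(b) - lgamma(a + b)

def betabinom_pmf(k, n, alpha, beta):
    """Closed form: C(n, k) * B(k + alpha, n - k + beta) / B(alpha, beta)."""
    if not 0 <= k <= n:
        return 0.0
    return comb(n, k) * exp(log_beta(k + alpha, n - k + beta) - log_beta(alpha, beta))

def compound_pmf_numeric(k, n, alpha, beta, steps=20000):
    """Midpoint-rule approximation of the integral of Bin(k | n, p) * Beta(p | alpha, beta)."""
    h = 1.0 / steps
    total = 0.0
    for i in range(steps):
        p = (i + 0.5) * h
        binom = comb(n, k) * p**k * (1 - p) ** (n - k)
        prior = exp((alpha - 1) * log(p) + (beta - 1) * log(1 - p) - log_beta(alpha, beta))
        total += binom * prior * h
    return total

# Illustrative parameters (assumed, not from the article).
pmf = [betabinom_pmf(k, 10, 2.0, 3.0) for k in range(11)]
uniform_case = [betabinom_pmf(k, 5, 1.0, 1.0) for k in range(6)]
```

Working with `lgamma` on the log scale avoids overflow in the gamma functions for larger parameters. With α = β = 1 the same function returns the discrete uniform probabilities 1/(n + 1).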

Beta-binomial as an urn model

The beta-binomial distribution can also be motivated via an urn model for positive integer values of α and β. Specifically, imagine an urn containing α red balls and β black balls, from which random draws are made. If a red ball is drawn, it is replaced and another red ball is added to the urn (so two red balls are returned). Likewise, if a black ball is drawn, it is replaced and another black ball is added to the urn. If this is repeated n times, then the number of red balls observed, k, follows a beta-binomial distribution with parameters n, α and β.

Note that if the random draws are with simple replacement (no balls over and above the observed ball are added to the urn), then the distribution follows a binomial distribution and if the random draws are made without replacement, the distribution follows a hypergeometric distribution.
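The urn description can be checked exactly rather than by simulation. The sketch below propagates the probability of having seen k red balls through n draws and compares the result with the beta-binomial formula; the function names and the urn contents (3 red, 2 black, 6 draws) are assumptions chosen for illustration.

```python
from math import comb, exp, lgamma

def betabinom_pmf(k, n, alpha, beta):
    """C(n, k) * B(k + alpha, n - k + beta) / B(alpha, beta), via log-gamma."""
    log_b = lambda a, b: lgamma(a) + lgamma(b) - lgamma(a + b)
    return comb(n, k) * exp(log_b(k + alpha, n - k + beta) - log_b(alpha, beta))

def polya_urn_distribution(n, red, black):
    """Exact distribution of the number of red draws after n draws.

    Each drawn ball is returned together with one extra ball of the same colour.
    """
    dist = [1.0]  # dist[k] = P(k red balls seen so far); nothing drawn yet
    for t in range(n):  # t draws have already been made
        total = red + black + t  # every earlier draw added one ball
        new = [0.0] * (t + 2)
        for k, prob in enumerate(dist):
            p_red = (red + k) / total  # k extra red balls are in the urn
            new[k + 1] += prob * p_red
            new[k] += prob * (1.0 - p_red)
        dist = new
    return dist

# Illustrative urn: 3 red balls, 2 black balls, 6 draws.
urn = polya_urn_distribution(6, 3, 2)
formula = [betabinom_pmf(k, 6, 3, 2) for k in range(7)]
```

Replacing the update `(red + k) / total` by the constant `red / (red + black)` would give the binomial case of simple replacement mentioned above.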

Moments and properties

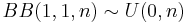

The first three raw moments are

and the kurtosis is

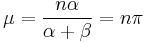

Letting  we note, suggestively, that the mean can be written as

we note, suggestively, that the mean can be written as

and the variance as

where  is the pairwise correlation between the n Bernoulli draws and is called the over-dispersion parameter.

is the pairwise correlation between the n Bernoulli draws and is called the over-dispersion parameter.
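The closed-form mean, variance and kurtosis can be checked against moments computed by summing over the probability mass function. This is a hedged sketch: the helper names and the test parameters (n = 12, α = 2.5, β = 4) are assumptions, and the kurtosis is the non-excess version, which equals 1 for a fair Bernoulli trial.

```python
from math import comb, exp, lgamma

def betabinom_pmf(k, n, alpha, beta):
    log_b = lambda a, b: lgamma(a) + lgamma(b) - lgamma(a + b)
    return comb(n, k) * exp(log_b(k + alpha, n - k + beta) - log_b(alpha, beta))

def moments_by_summation(n, alpha, beta):
    """Mean, variance and kurtosis obtained directly from the PMF."""
    pmf = [betabinom_pmf(k, n, alpha, beta) for k in range(n + 1)]
    mean = sum(k * p for k, p in enumerate(pmf))
    var = sum((k - mean) ** 2 * p for k, p in enumerate(pmf))
    fourth = sum((k - mean) ** 4 * p for k, p in enumerate(pmf))
    return mean, var, fourth / var**2

def moments_closed_form(n, alpha, beta):
    """Mean n*pi, variance n*pi*(1-pi)*[1+(n-1)*rho], and the kurtosis formula."""
    s = alpha + beta
    pi = alpha / s
    rho = 1.0 / (s + 1.0)  # over-dispersion parameter
    mean = n * pi
    var = n * pi * (1 - pi) * (1 + (n - 1) * rho)
    bracket = (
        s * (s - 1 + 6 * n)
        + 3 * alpha * beta * (n - 2)
        + 6 * n**2
        - 3 * alpha * beta * n * (6 - n) / s
        - 18 * alpha * beta * n**2 / s**2
    )
    kurt = s**2 * (1 + s) / (n * alpha * beta * (s + 2) * (s + 3) * (s + n)) * bracket
    return mean, var, kurt

numeric = moments_by_summation(12, 2.5, 4.0)
closed = moments_closed_form(12, 2.5, 4.0)
```

As α + β grows with π held fixed, ρ tends to 0 and the variance returned by `moments_closed_form` approaches the binomial value nπ(1 − π).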

Point estimates

Method of moments

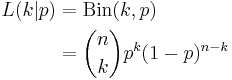

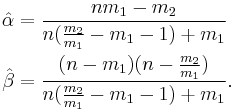

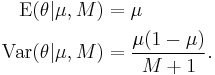

The method of moments estimates can be gained by noting the first and second moments of the beta-binomial namely

and setting these raw moments equal to the sample moments

and solving for α and β we get

Note that these estimates can be non-sensically negative which is evidence that the data is either undispersed or underdispersed relative to the binomial distribution. In this case, the binomial distribution and the hypergeometric distribution are alternative candidates respectively.
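A short sketch of the moment-matching step: starting from known α and β, the raw moments are computed and then inverted, which should recover the original parameters. The function names and numeric values are assumptions for illustration. The last call uses made-up moments with variance below the binomial variance to show the negative estimates described above.

```python
def raw_moments(n, alpha, beta):
    """First and second raw moments of the beta-binomial distribution."""
    s = alpha + beta
    mu1 = n * alpha / s
    mu2 = n * alpha * (n * (1 + alpha) + beta) / (s * (1 + s))
    return mu1, mu2

def method_of_moments(n, m1, m2):
    """Solve mu1 = m1, mu2 = m2 for (alpha, beta)."""
    denom = n * (m2 / m1 - m1 - 1) + m1  # zero for exactly binomial moments
    alpha = (n * m1 - m2) / denom
    beta = (n - m1) * (n - m2 / m1) / denom
    return alpha, beta

# Round trip with illustrative parameters.
m1, m2 = raw_moments(12, 3.0, 5.0)
alpha_hat, beta_hat = method_of_moments(12, m1, m2)

# Made-up underdispersed moments: mean 6, variance 1 (binomial variance would be 3).
alpha_bad, beta_bad = method_of_moments(12, 6.0, 37.0)
```

The denominator vanishes when the sample moments are exactly binomial, so a practical implementation would need to guard against division by zero or by a value very close to zero.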

Maximum likelihood estimation

While closed-form maximum likelihood estimates are impractical, the probability mass function consists of common functions (gamma and/or beta functions), so the estimates can easily be found via direct numerical optimization. Maximum likelihood estimates from empirical data can be computed using general methods for fitting multinomial Pólya distributions, which are described in (Minka 2003). The R package VGAM, through the function vglm, fits GLM-type models by maximum likelihood with responses distributed according to the beta-binomial distribution. Note also that there is no requirement that n be fixed throughout the observations.
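The direct numerical optimization can be sketched with the standard library alone. This is not the method of Minka (2003) or of VGAM: it is a hand-rolled coordinate search using golden-section steps over π = α/(α+β) and log(α+β), a parameterization chosen here as an assumption because the two coordinates interact only weakly. The data are made up, with a different number of trials per observation.

```python
from math import exp, lgamma, log, sqrt

def log_pmf(k, n, alpha, beta):
    log_choose = lgamma(n + 1) - lgamma(k + 1) - lgamma(n - k + 1)
    log_b = lambda a, b: lgamma(a) + lgamma(b) - lgamma(a + b)
    return log_choose + log_b(k + alpha, n - k + beta) - log_b(alpha, beta)

def log_likelihood(data, alpha, beta):
    """data is a list of (k_i, n_i) pairs; n_i may differ between observations."""
    return sum(log_pmf(k, n, alpha, beta) for k, n in data)

def golden_max(f, lo, hi, tol=1e-8):
    """Golden-section search for the maximizer of a unimodal f on [lo, hi]."""
    inv_phi = (sqrt(5) - 1) / 2
    a, b = lo, hi
    c, d = b - inv_phi * (b - a), a + inv_phi * (b - a)
    fc, fd = f(c), f(d)
    while b - a > tol:
        if fc > fd:  # maximum lies in [a, d]
            b, d, fd = d, c, fc
            c = b - inv_phi * (b - a)
            fc = f(c)
        else:  # maximum lies in [c, b]
            a, c, fc = c, d, fd
            d = a + inv_phi * (b - a)
            fd = f(d)
    return (a + b) / 2

def fit_mle(data, sweeps=50):
    """Alternate one-dimensional searches over pi and log(alpha + beta)."""
    def ll(pi, log_m):
        m = exp(log_m)
        return log_likelihood(data, pi * m, (1 - pi) * m)
    pi = sum(k for k, _ in data) / sum(n for _, n in data)
    log_m = log(10.0)
    for _ in range(sweeps):
        pi = golden_max(lambda x: ll(x, log_m), 1e-6, 1 - 1e-6)
        log_m = golden_max(lambda x: ll(pi, x), log(1e-3), log(1e6))
    m = exp(log_m)
    return pi * m, (1 - pi) * m

# Made-up (successes, trials) pairs, for illustration only.
data = [(2, 10), (7, 10), (4, 12), (9, 12), (1, 8), (6, 8),
        (3, 6), (5, 9), (8, 15), (11, 15), (2, 14), (10, 14)]
alpha_hat, beta_hat = fit_mle(data)
```

The search assumes the likelihood is unimodal along each coordinate and that the optimum lies inside the search box. For data that are not overdispersed the estimate of α + β would run to the upper bound, signalling that a plain binomial model is adequate.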

Example

The following data give the number of male children among the first 12 children in families of size 13, for 6115 families taken from hospital records in 19th century Saxony (Sokal and Rohlf, p. 59 from Lindsey). The 13th child is ignored to mitigate the effect of families non-randomly stopping when a desired gender is reached.

| Males | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Families | 3 | 24 | 104 | 286 | 670 | 1033 | 1343 | 1112 | 829 | 478 | 181 | 45 | 7 |

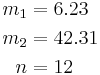

We note the first two sample moments are

and therefore the method of moments estimates are

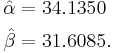

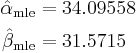

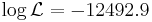

The maximum likelihood estimates can be found numerically

and the maximized log-liklihood is

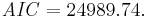

from which we find the AIC

The AIC for the competing binomial model is AIC = 25070.34 and thus we see that the beta-binomial model provides a superior fit to the data i.e. there is evidence for overdispersion. Trivers and Willard posit a theoretical justification for heterogeneity in gender-proneness among families (i.e. overdispersion).

The superior fit is especially evident in the tails:

| Males | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Observed Families | 3 | 24 | 104 | 286 | 670 | 1033 | 1343 | 1112 | 829 | 478 | 181 | 45 | 7 |
| Predicted (Beta-Binomial) | 2.3 | 22.6 | 104.8 | 310.9 | 655.7 | 1036.2 | 1257.9 | 1182.1 | 853.6 | 461.9 | 177.9 | 43.8 | 5.2 |
| Predicted (Binomial p = 0.519215) | 0.9 | 12.1 | 71.8 | 258.5 | 628.1 | 1085.2 | 1367.3 | 1265.6 | 854.2 | 410.0 | 132.8 | 26.1 | 2.3 |
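The quantities in this example can be recomputed from the tabulated family counts. The sketch below derives the sample moments, the method of moments estimates, the binomial AIC and the expected counts. As a simplifying assumption it evaluates the beta-binomial log-likelihood at the method of moments estimates instead of running an optimizer, so the resulting AIC is only an upper bound on the AIC at the maximum likelihood estimates. Variable names are illustrative.

```python
from math import comb, exp, lgamma, log

n = 12
families = [3, 24, 104, 286, 670, 1033, 1343, 1112, 829, 478, 181, 45, 7]
N = sum(families)  # 6115 families

# Sample raw moments of the number of males per family.
m1 = sum(k * f for k, f in enumerate(families)) / N
m2 = sum(k * k * f for k, f in enumerate(families)) / N

# Method of moments estimates.
denom = n * (m2 / m1 - m1 - 1) + m1
alpha_mom = (n * m1 - m2) / denom
beta_mom = (n - m1) * (n - m2 / m1) / denom

def binom_pmf(k, n, p):
    return comb(n, k) * p**k * (1 - p) ** (n - k)

def betabinom_pmf(k, n, a, b):
    log_b = lambda x, y: lgamma(x) + lgamma(y) - lgamma(x + y)
    return comb(n, k) * exp(log_b(k + a, n - k + b) - log_b(a, b))

# Binomial model: the MLE of p is the overall proportion of males.
p_hat = m1 / n
loglik_binom = sum(f * log(binom_pmf(k, n, p_hat)) for k, f in enumerate(families))
aic_binom = 2 * 1 - 2 * loglik_binom

# Beta-binomial model, evaluated at the moment estimates (not the MLE).
loglik_bb = sum(f * log(betabinom_pmf(k, n, alpha_mom, beta_mom))
                for k, f in enumerate(families))
aic_bb_at_mom = 2 * 2 - 2 * loglik_bb

# Expected family counts under each fitted model.
expected_binom = [N * binom_pmf(k, n, p_hat) for k in range(n + 1)]
expected_bb = [N * betabinom_pmf(k, n, alpha_mom, beta_mom) for k in range(n + 1)]
```

Because the moment estimates lie close to the maximum likelihood estimates for these data, the expected beta-binomial counts from this sketch should be close to, though not necessarily identical with, the tabulated predictions.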

Further Bayesian considerations

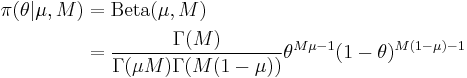

It is convenient to reparameterize the distributions so that the expected mean of the prior is a single parameter: Let

where

so that

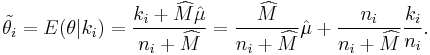

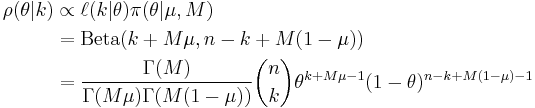

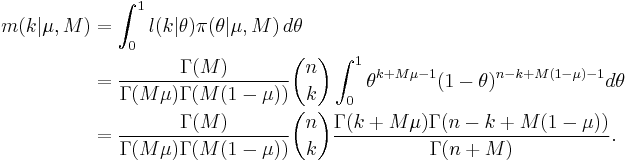

The posterior distribution ρ(θ|k) is also a beta distribution:

And

while the marginal distribution m(k|μ, M) is given by

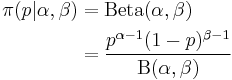

Because the marginal is a complicated nonlinear function of gamma functions (and its derivatives involve digamma functions), it is difficult to obtain a marginal maximum likelihood estimate (MMLE) for the mean and variance in closed form. Instead, we use the method of iterated expectations to find the expected value of the marginal moments.

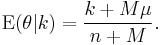

Let us write our model as a two-stage compound sampling model. Let ki be the number of success out of ni trials for event i:

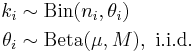

We can find iterated moment estimates for the mean and variance using the moments for the distributions in the two-stage model:

(Here we have used the law of total expectation and the law of total variance.)

We want point estimates for  and

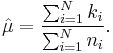

and  . The estimated mean

. The estimated mean  is calculated from the sample

is calculated from the sample

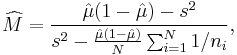

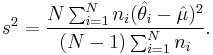

The estimate of the hyperparameter M is obtained using the moment estimates for the variance of the two-stage model:

Solving:

where

Since we now have parameter point estimates,  and

and  , for the underlying distribution, we would like to find a point estimate

, for the underlying distribution, we would like to find a point estimate  for the probability of success for event i. This is the weighted average of the event estimate

for the probability of success for event i. This is the weighted average of the event estimate  and

and  . Given our point estimates for the prior, we may now plug in these values to find a point estimate for the posterior

. Given our point estimates for the prior, we may now plug in these values to find a point estimate for the posterior

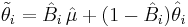

Shrinkage factors

We may write the posterior estimate as a weighted average:

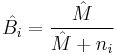

where  is called the shrinkage factor.

is called the shrinkage factor.
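The moment-based empirical Bayes procedure and the shrinkage step can be sketched together. The function name and the five (successes, trials) pairs are made up for illustration, and the weighted sample variance used for s² is an assumed estimator, N Σ nᵢ(θ̂ᵢ − μ̂)² / ((N − 1) Σ nᵢ).

```python
def empirical_bayes_shrinkage(k, n):
    """Moment estimates of (mu, M) and the shrunken success probabilities.

    k[i] successes out of n[i] trials for event i.
    """
    N = len(k)
    mu = sum(k) / sum(n)  # pooled estimate of the prior mean
    theta = [ki / ni for ki, ni in zip(k, n)]  # raw per-event estimates
    # Weighted sample variance of the raw estimates (assumed estimator for s^2).
    s2 = N * sum(ni * (t - mu) ** 2 for ni, t in zip(n, theta)) / ((N - 1) * sum(n))
    # Solve the two-stage variance equation for M.
    M = (mu * (1 - mu) - s2) / (s2 - mu * (1 - mu) / N * sum(1 / ni for ni in n))
    B = [M / (M + ni) for ni in n]  # shrinkage factors
    posterior = [b * mu + (1 - b) * t for b, t in zip(B, theta)]
    return mu, M, B, posterior

# Made-up data: five events with ten trials each.
k = [3, 5, 8, 2, 6]
n = [10, 10, 10, 10, 10]
mu_hat, M_hat, B_hat, theta_tilde = empirical_bayes_shrinkage(k, n)
```

Events with few trials receive a shrinkage factor close to 1 and are pulled strongly toward the pooled mean, while events with many trials keep estimates close to their raw proportions. If the data are not overdispersed the moment estimate of M can be negative, in which case this sketch is not meaningful.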

Related distributions

$\operatorname{BetaBin}(n, \alpha = 1, \beta = 1) \sim U(0, n)$, where $U(a, b)$ is the discrete uniform distribution.


References

* Minka, Thomas P. (2003). Estimating a Dirichlet distribution. Microsoft Technical Report.

External links

- Using the Beta-binomial distribution to assess performance of a biometric identification device

- Fastfit contains Matlab code for fitting Beta-Binomial distributions (in the form of two-dimensional Pólya distributions) to data.
